Install PySpark

1.1 Download Spark package

1.2 Set SPARK_HOME in ~/.bash_profile

```bash
export SPARK_HOME="/Users/mac/Documents/Software/spark-2.1.1-bin-hadoop2.7"
```

1.3 Activate SPARK_HOME

```bash
source ~/.bash_profile
```
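To confirm that the variable is actually visible to a Python process (for example, the interpreter PyCharm launches later), a small check like the one below can help. This is a sketch: the helper name `check_spark_home` is hypothetical, and treating `bin/spark-submit` as the marker of a valid Spark directory is an assumption based on the layout of the prebuilt Spark downloads.

```python
import os


def check_spark_home(env=None):
    """Return SPARK_HOME if it points at an unpacked Spark package.

    Hypothetical helper. `env` defaults to os.environ; passing a dict makes the
    check easy to test. Using bin/spark-submit as the marker file is an
    assumption based on the prebuilt Spark package layout.
    """
    env = os.environ if env is None else env
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        raise EnvironmentError("SPARK_HOME is not set; was ~/.bash_profile sourced?")
    if not os.path.isfile(os.path.join(spark_home, "bin", "spark-submit")):
        raise EnvironmentError("SPARK_HOME does not look like a Spark package: " + spark_home)
    return spark_home
```

Calling `check_spark_home()` from the interpreter you plan to use either returns the path or raises with a hint about what is missing.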

Install PyCharm

Create a Python project

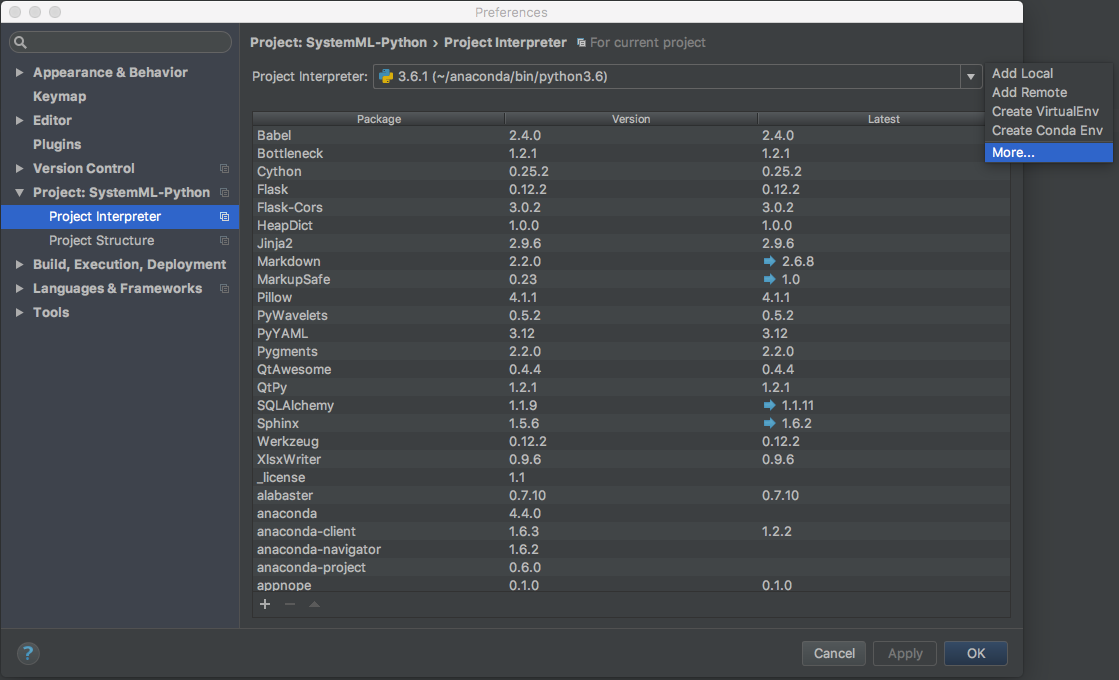

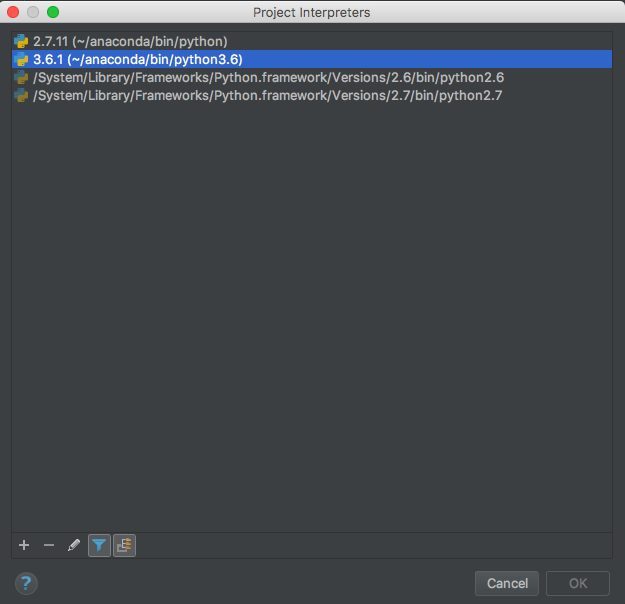

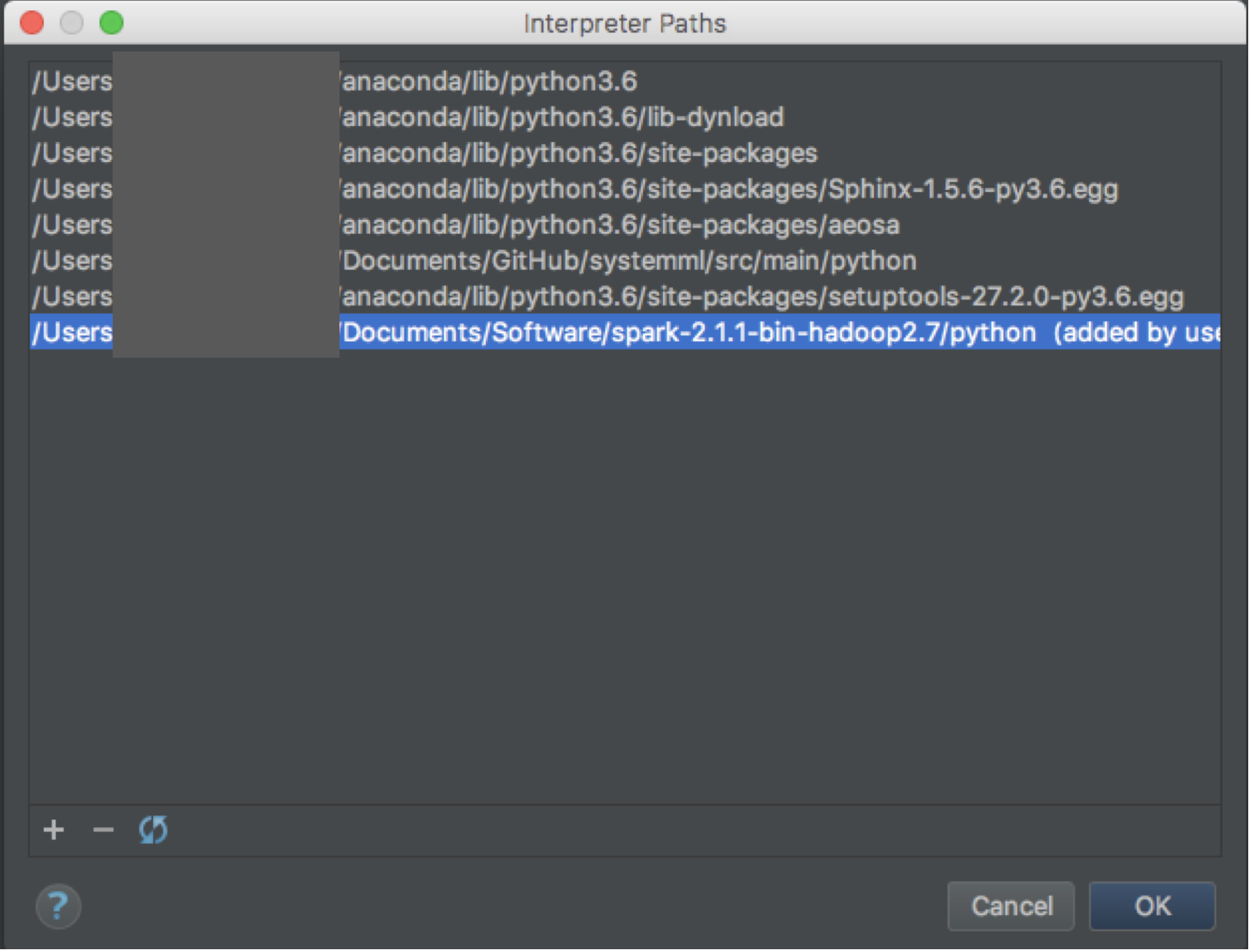

Add the PySpark library to the interpreter

Preferences -> Project -> Project Interpreter -> Project Interpreter settings (Figure 1) -> Show paths for the selected interpreter (Figure 2) -> add the PySpark library (Figure 3)

Figure 1:

Figure 2:

Figure 3:
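The interpreter paths added through PyCharm can also be expressed in code, which is handy when running a script outside the IDE. A minimal sketch, assuming the usual layout of the prebuilt Spark 2.x packages, where the `pyspark` package lives in `$SPARK_HOME/python` and Py4J ships as `py4j-<version>-src.zip` under `$SPARK_HOME/python/lib`. The function names are hypothetical, and whether these are exactly the entries shown in Figure 3 is an assumption.

```python
import glob
import os
import sys


def pyspark_paths(spark_home):
    """List the directory and zip(s) that make `import pyspark` work.

    Assumes the prebuilt Spark layout: python/ holds the pyspark package and
    python/lib/ holds py4j-<version>-src.zip (the version varies by release).
    """
    python_dir = os.path.join(spark_home, "python")
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def add_pyspark_to_sys_path(spark_home):
    """Prepend the PySpark paths to sys.path in order, skipping duplicates."""
    for path in reversed(pyspark_paths(spark_home)):
        if path not in sys.path:
            sys.path.insert(0, path)
```

For example, `add_pyspark_to_sys_path(os.environ["SPARK_HOME"])` followed by `import pyspark`. Globbing for the Py4J zip avoids hard-coding a version number that changes between Spark releases.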

Create a Python file that prepares the SparkContext and SparkSession

```python
import os
```

Import it in the Python file of your SystemML program

```python
from src.pyspark_sc import *
```